Making The Most of a Rewrite

Rewriting an app can be a long and daunting process, but it has a number of advantages over making in-place improvements to your codebase. A rewrite allows you to rethink your application architecture at large, with the experience and hindsight of having built it before.

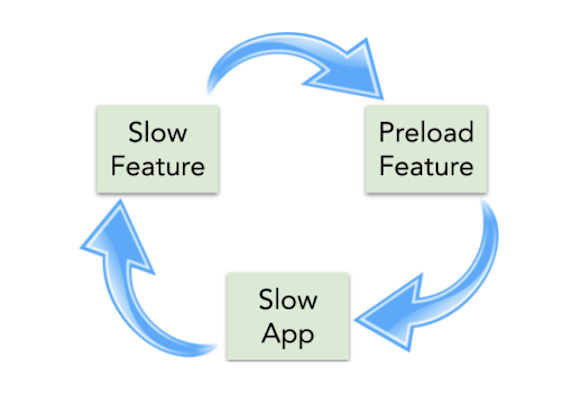

As Part 1 of this series highlights, Snapchat grew over time into a complex app with dozens of large and disjointed features. As the app grew, we saw each feature fighting for resources in a spiraling “tragedy of the commons,” resulting in a sluggish experience across much of the app. Too many features ran too much work at app startup so that they could load a bit faster if and when they were used. While this may help one particular feature load faster, every other feature loads slower as a result.

For our rewrite to be a success, we knew we had to get ahead of this problem. If each feature loads fast in isolation, the features can still load fast when brought together. The big question we asked ourselves was: can we design each feature to load quickly on demand, without running a lot of initialization and preloading?

With these guidelines in mind, we set out to build our MVP, in this case a Minimum Viable Platform. For a feature to load both quickly and on demand, the data and resources required to power it must be nearly ready for display: available on disk, not behind a network request with unknowable latency. This led us to an overall architecture with a few key tenets:

Features are modularized and isolated from one another.

Within a feature, data synchronization services are separated from UI and business logic.

Data synchronization and UI presentation each have a limited number of tightly controlled entry points.

Different features have different needs for fetching their data. For example, messaging data is primarily push-driven, so most of its data synchronization can run naturally in the background. Discover content, on the other hand, refreshes regularly and needs to be fetched closer to when it is displayed. In both cases, strictly separating data synchronization from UI presentation provides a clean architecture that allows each piece to be iterated on and optimized separately, and to run in concert with other features competing for similar resources.
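To make these tenets concrete, here is a minimal Java sketch, assuming each feature exposes exactly two entry points: one for data synchronization and one for UI presentation. All names (`Feature`, `FeatureSync`, `FeatureUi`, `SyncTrigger`, `SyncCoordinator`) are hypothetical rather than Snapchat's actual API, and a string stands in for real UI.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: names are illustrative, not Snapchat's real API.

/** When a feature's data should be synchronized. */
enum SyncTrigger { PUSH, ON_DISPLAY }

/** Sole entry point for data sync: writes to the feature's local store, never touches UI. */
interface FeatureSync { void sync(); }

/** Sole entry point for presentation: reads local data only, never waits on the network. */
interface FeatureUi { String render(); }

final class Feature {
    final String name;
    final SyncTrigger trigger;
    final FeatureSync sync;
    final FeatureUi ui;

    Feature(String name, SyncTrigger trigger, FeatureSync sync, FeatureUi ui) {
        this.name = name;
        this.trigger = trigger;
        this.sync = sync;
        this.ui = ui;
    }
}

/** Drives sync through the entry points alone, without knowing feature internals. */
final class SyncCoordinator {
    private final List<Feature> features = new ArrayList<>();

    void register(Feature feature) { features.add(feature); }

    /** A push arrived: push-driven features (e.g. messaging) sync in the background. */
    List<String> onPush() {
        List<String> synced = new ArrayList<>();
        for (Feature f : features) {
            if (f.trigger == SyncTrigger.PUSH) {
                f.sync.sync();
                synced.add(f.name);
            }
        }
        return Collections.unmodifiableList(synced);
    }

    /** A feature is about to be shown: display-driven features (e.g. Discover) refresh now. */
    boolean beforeDisplay(Feature feature) {
        if (feature.trigger != SyncTrigger.ON_DISPLAY) return false;
        feature.sync.sync();
        return true;
    }
}
```

Because the coordinator only ever sees the two entry points, messaging and Discover can pick different sync strategies without either one leaking into the other's UI code.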

Since our major features have different designs, we did not mandate a particular architecture pattern, be it MVVM, MVI, MVC, or a similar acronym. However, the separation of data and UI naturally lends itself to unidirectional data flow coupled with immutable ViewModels, and we built a platform foundation to make this easy and clean for a wide variety of features.
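As an illustration of unidirectional data flow with immutable ViewModels, the following sketch assumes a simple reducer-style store. The class names are hypothetical, and the text above deliberately does not prescribe this exact pattern; it is one way the idea can look in practice.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical example; names are illustrative only.

/** Immutable ViewModel: any change produces a new instance. */
final class ChatListViewModel {
    final List<String> chats;
    final boolean loading;

    ChatListViewModel(List<String> chats, boolean loading) {
        this.chats = Collections.unmodifiableList(new ArrayList<>(chats)); // defensive, read-only copy
        this.loading = loading;
    }
}

/** Inputs flow one way: action -> reducer -> new ViewModel -> view. */
interface Action {}

final class LoadStarted implements Action {}

final class ChatsLoaded implements Action {
    final List<String> chats;
    ChatsLoaded(List<String> chats) { this.chats = chats; }
}

final class ChatListStore {
    private ChatListViewModel current = new ChatListViewModel(List.of(), false);
    private final List<Consumer<ChatListViewModel>> views = new ArrayList<>();

    /** Pure function of (old state, action), so it is easy to test in isolation. */
    static ChatListViewModel reduce(ChatListViewModel state, Action action) {
        if (action instanceof LoadStarted) return new ChatListViewModel(state.chats, true);
        if (action instanceof ChatsLoaded) return new ChatListViewModel(((ChatsLoaded) action).chats, false);
        return state;
    }

    /** Views only ever receive complete, immutable snapshots, starting with the current one. */
    void subscribe(Consumer<ChatListViewModel> view) {
        views.add(view);
        view.accept(current);
    }

    void dispatch(Action action) {
        current = reduce(current, action);
        for (Consumer<ChatListViewModel> view : views) view.accept(current);
    }
}
```

Since a view can never mutate the state it was handed, the only way to change what is on screen is to dispatch a new action, which keeps data flowing in one direction.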

This was also an opportunity to modernize our stack from top to bottom to make it last for many years to come. The foundation is built from several frameworks commonly used in the Android community, as well as several solutions we built in-house to meet Snapchat’s unique demands, each integrated with attribution from our Attributed Everything initiative. The following are some of the major design choices we made, and a glimpse into what has worked well, and where we still have some opportunities ahead of us.

To align with our goal of building a modular app composed of distinct features, we rely on dependency injection, powered by Dagger2, to provide dependencies across module boundaries. Our initial dependency graph was designed around the Dagger-Android extension library, which organizes fragments as subcomponents of a parent Activity component.

As we went on, it became clear that this pattern doesn’t scale well to a large codebase and did not meet all of the objectives of our rewrite. Any dependency used by more than one component landed in the Application or Activity component, resulting in massive generated classes for each. Moreover, a subcomponent hierarchy could not support dynamic features.

Since our initial launch, we have been migrating to a “Component Graph” architecture, built with component dependencies rather than nested subcomponents, which can be composed more dynamically. This also simplifies our sample apps. Sample apps can foster more rapid development and debugging, but only if their boilerplate overhead is kept to a minimum, which our new graph allows. The in-place migration was a major challenge, and we developed Dagger Browser to gain insight into our graph structure along the way.
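The difference between nested subcomponents and component dependencies can be sketched without Dagger itself. In Dagger, a component declares the latter with `@Component(dependencies = ...)`; the plain-Java sketch below hand-writes roughly what that wiring amounts to, using hypothetical `CoreComponent` and `ProfileComponent` classes. The feature component depends only on a small interface, so a sample app can satisfy it with a fake instead of building the whole app graph.

```java
// Hand-written stand-in for the kind of wiring Dagger generates; all names are hypothetical.

/** A shared service (non-final so a sample app can substitute a fake). */
class NetworkClient {
    String get(String path) { return "response for " + path; }
}

/** The app-wide component owns singletons and exposes only selected provisions. */
final class CoreComponent {
    private final NetworkClient networkClient = new NetworkClient();

    NetworkClient networkClient() { return networkClient; }
}

/** Everything the profile feature needs from outside, and nothing more. */
interface ProfileDependencies {
    NetworkClient networkClient();
}

final class ProfileRepository {
    private final NetworkClient client;

    ProfileRepository(NetworkClient client) { this.client = client; }

    String load(String user) { return client.get("/profile/" + user); }
}

/** A feature component built from a dependency interface, not nested inside an Activity component. */
final class ProfileComponent {
    private final ProfileDependencies deps;

    ProfileComponent(ProfileDependencies deps) { this.deps = deps; }

    ProfileRepository profileRepository() { return new ProfileRepository(deps.networkClient()); }
}
```

In the full app, `new ProfileComponent(core::networkClient)` wires the feature to the real graph; a sample app can pass `() -> fakeClient` instead, with no Application or Activity component involved.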

Navigation is a core need of any complex app, and Snapchat’s unique user experience adds a number of challenges, which led us to develop our own navigation framework, Deck. For example, in our old codebase, our main page navigation was a heavily modified ViewPager. While we could control the order, a ViewPager still needs to load neighboring pages before they can be swiped into view. A key architecture tenet of our rebuild was to be both modular and fast, preferring to do work on demand rather than rampant feature preloading, and a navigation framework is key to managing this behavior.

Deck was designed with performance and flexibility in mind. The overall application layout is specified in a few lines of code. Pages can be configured to load completely on demand, or from a cache where they are prepared off-screen. We prefer on-demand loading whenever possible, to avoid resource contention while other features are in use. If a screen loads slowly, we try to fix the root cause rather than simply moving work into the background, a slippery slope we were all too familiar with in our legacy codebase.
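The two loading modes can be sketched as a small page registry. This is a hypothetical illustration, not Deck's API: `PageRegistry`, `LoadPolicy`, `prepare`, and `show` are names chosen for the example, and the `created` counter exists only to make the behavior observable.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical sketch of on-demand vs cached page loading; not Deck's real API.

enum LoadPolicy { ON_DEMAND, CACHED }

final class Page {
    final String id;
    Page(String id) { this.id = id; }
}

final class PageRegistry {
    private final Map<String, Supplier<Page>> factories = new HashMap<>();
    private final Map<String, LoadPolicy> policies = new HashMap<>();
    private final Map<String, Page> cache = new HashMap<>();
    int created = 0; // number of pages constructed so far

    void register(String id, LoadPolicy policy, Supplier<Page> factory) {
        factories.put(id, factory);
        policies.put(id, policy);
    }

    /** Prepares a CACHED page off-screen; ON_DEMAND pages are deliberately left alone. */
    void prepare(String id) {
        if (policies.get(id) == LoadPolicy.CACHED) cache.computeIfAbsent(id, this::create);
    }

    /** Called when the user navigates to a page. */
    Page show(String id) {
        if (policies.get(id) == LoadPolicy.CACHED) return cache.computeIfAbsent(id, this::create);
        return create(id); // ON_DEMAND: no work happens until the page is actually needed
    }

    private Page create(String id) {
        Supplier<Page> factory = factories.get(id);
        if (factory == null) throw new IllegalArgumentException("unknown page " + id);
        created++;
        return factory.get();
    }
}
```

Making `ON_DEMAND` the default and reserving `CACHED` for a few pages mirrors the preference stated above: every cached page is work done ahead of time that competes with whatever the user is doing now.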

Beyond our homepage navigation, Deck handles the presentation of our dialogs and popups, fragment transitions, back stack behavior, and provides hooks to observe the numerous state transitions encountered as a user navigates the app.

Deck also comes fully instrumented to help debug the performance and correctness of each feature. Each page is programmatically attributed to a feature team, making it easy to log the page’s latency and scrolling performance metrics. While we can’t fully automate latency measurement, because a page “loads” asynchronously, a single method call is enough to provide this metric, covering both “cold” and “warm” page starts, which have different performance characteristics.
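A minimal sketch of that single-call latency pattern follows. The framework records when navigation starts, and the feature calls one method once its asynchronous content is on screen. `PageLatencyTracker`, `markLoaded`, and the rule that "cold" means the first load of a page in the current process are all assumptions made for illustration; the clock is injected so the example is deterministic.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.LongSupplier;

// Hypothetical latency tracker; the names and the cold/warm rule are assumptions.

final class PageLatencyTracker {
    static final class Sample {
        final String page;
        final String team;
        final long millis;
        final boolean cold;

        Sample(String page, String team, long millis, boolean cold) {
            this.page = page;
            this.team = team;
            this.millis = millis;
            this.cold = cold;
        }
    }

    private final LongSupplier clockMillis;
    private final Map<String, String> owners = new HashMap<>();
    private final Map<String, Long> navigationStarts = new HashMap<>();
    private final Set<String> loadedBefore = new HashSet<>();
    final List<Sample> samples = new ArrayList<>();

    PageLatencyTracker(LongSupplier clockMillis) { this.clockMillis = clockMillis; }

    /** Attribution: every page is registered with its owning team. */
    void registerPage(String page, String team) { owners.put(page, team); }

    /** Recorded by the navigation framework itself when a page is requested. */
    void onNavigate(String page) { navigationStarts.put(page, clockMillis.getAsLong()); }

    /** The single call a feature makes once its asynchronously loaded content is on screen. */
    void markLoaded(String page) {
        Long start = navigationStarts.remove(page);
        if (start == null) return; // nothing in flight, e.g. a duplicate call
        boolean cold = loadedBefore.add(page); // first load of this page in this process
        String team = owners.getOrDefault(page, "unattributed");
        samples.add(new Sample(page, team, clockMillis.getAsLong() - start, cold));
    }
}
```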

RxJava was one of the biggest paradigm shifts for our team. It is powerful and flexible enough to handle most of our concurrency needs, and provides a natural framework for unidirectional data flow, which also helps reduce the tendency to run superfluous work on the main thread.

RxJava brought a more uniform platform for handling concurrency, but with a steep learning curve across the team. We spent a lot of effort internally establishing good patterns and helping our team understand what RxJava was doing under the hood, by way of regular tech talks, documentation, and a dedicated Slack channel. We also put lint rules in place to catch common issues such as leaked disposables or dangerous “blockingGet()” calls, which are prone to deadlocks.

To help with our adoption of RxJava, we also built up a framework to:

Support scoped tasks, to avoid leaky disposables

Provide attributed Schedulers in place of RxJava’s defaults (Schedulers.io(), etc.)

Use this attribution in performance traces and crash reports, to help route issues to the underlying code and its team
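The ideas behind scoped tasks and attributed Schedulers can be sketched with `java.util.concurrent` instead of RxJava. In RxJava 2, `Schedulers.from(Executor)` turns an executor into a `Scheduler`, and a `CompositeDisposable` plays roughly the role of the scope below. `AttributedExecutors` and `TaskScope` are hypothetical names, and the sketch is not the framework described above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch; not the framework described in the text.

final class AttributedExecutors {
    /** Threads are named after their owning feature, so traces and crash reports show who ran the work. */
    static ExecutorService forFeature(String feature, int threads) {
        AtomicInteger counter = new AtomicInteger();
        return Executors.newFixedThreadPool(threads, runnable -> {
            Thread thread = new Thread(runnable, feature + "-worker-" + counter.incrementAndGet());
            thread.setDaemon(true); // background work never keeps the process alive
            return thread;
        });
    }
}

/** Owns in-flight work and cancels all of it when closed, e.g. when a screen goes away. */
final class TaskScope implements AutoCloseable {
    private final ExecutorService executor;
    private final List<Future<?>> tasks = new ArrayList<>();
    private boolean closed;

    TaskScope(ExecutorService executor) { this.executor = executor; }

    synchronized Future<?> launch(Runnable work) {
        if (closed) throw new IllegalStateException("scope already closed");
        Future<?> future = executor.submit(work);
        tasks.add(future);
        return future;
    }

    /** Cancelling everything on close is the analogue of never leaking a disposable. */
    @Override
    public synchronized void close() {
        closed = true;
        for (Future<?> task : tasks) task.cancel(true); // requests interruption of work still running
        tasks.clear();
    }
}
```

Tying work to a scope means a feature cannot forget to clean up, and naming threads by feature gives every stack trace a built-in owner.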

Retrofit enforces the right boundary for defining a network API, with all request and encoding parameters specified in an interface. This makes it impossible to intermix business logic with the endpoint specification, an issue that had grown pervasive in our legacy codebase.

To fit our modularization goals, we create “HttpInterface” implementations for any modules that issue HTTP calls, and a common HttpServiceFactory to materialize them against our production service, a developer instance, or a testing environment.
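The interface-plus-factory split can be illustrated with a JDK dynamic proxy, which is also the mechanism Retrofit's own `create()` is built on. In this sketch the `@Get` annotation, `StoriesApi`, `Environment`, and the shape of `HttpServiceFactory` are all hypothetical, the base URLs are placeholders, and the proxy only describes the request instead of sending it.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.regex.Matcher;

// Hypothetical Retrofit-style sketch; Get, StoriesApi, and Environment are invented for illustration.

/** Declares an endpoint on an interface method, so no business logic can live alongside it. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Get {
    String value();
}

/** Marker for interfaces that describe HTTP endpoints (shape assumed). */
interface HttpInterface {}

interface StoriesApi extends HttpInterface {
    @Get("/stories/{id}")
    String story(String id);
}

enum Environment {
    PRODUCTION("https://api.example.com"),
    DEVELOPER("https://dev.example.com"),
    TEST("http://localhost:8080");

    final String baseUrl;

    Environment(String baseUrl) { this.baseUrl = baseUrl; }
}

/** Materializes any HttpInterface against one environment using a dynamic proxy. */
final class HttpServiceFactory {
    private final Environment environment;

    HttpServiceFactory(Environment environment) { this.environment = environment; }

    <T extends HttpInterface> T create(Class<T> api) {
        Object proxy = Proxy.newProxyInstance(api.getClassLoader(), new Class<?>[] {api}, (self, method, args) -> {
            Get get = method.getAnnotation(Get.class);
            if (get == null) throw new UnsupportedOperationException(method.getName());
            String path = get.value();
            if (args != null) {
                for (Object arg : args) { // fill {placeholders} left to right
                    path = path.replaceFirst("\\{[^}]+\\}", Matcher.quoteReplacement(String.valueOf(arg)));
                }
            }
            // A real client would execute the request here; the sketch only describes it.
            return "GET " + environment.baseUrl + path;
        });
        return api.cast(proxy);
    }
}
```

Switching a module from production to a test server is then a matter of which `Environment` the factory is built with; the endpoint interface itself never changes.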

Our server infrastructure is large and diverse, and was also undergoing its own transformation to a Service Mesh. To avoid spiraling complexity, we minimized the backend changes needed to support our client rewrite, which sometimes resulted in unique encoding needs. We were able to abstract these differences behind Retrofit Converters, creating JSON and Protobuf requests with several different encoding schemes and HTTP headers.

Having Retrofit as a way to define an HTTP API also means we can push changes to all features from one managed networking client. For example, we observed better connectivity results using Cronet in place of OkHttp. While Retrofit is tightly integrated with OkHttp, we managed to bridge Retrofit and Cronet using a chain of filters built on top of OkHttp’s Interceptors, preserving the clean Retrofit API without giving up the performance of Cronet.
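Bridging works because of how an interceptor chain behaves: each link may rewrite the request and call `proceed()`, and the last link may answer the request itself instead of passing it on. The sketch below models that idea with minimal hypothetical `Request`, `Response`, and `Interceptor` types shaped loosely like OkHttp's interceptor and chain, not with OkHttp's real classes. `AlternateTransportInterceptor` merely stands in for a Cronet-backed call; it does not reproduce Snapchat's bridge.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model loosely shaped like OkHttp's Interceptor/Chain; these are not OkHttp's classes.

final class Request {
    final String url;
    final List<String> headers;

    Request(String url, List<String> headers) {
        this.url = url;
        this.headers = List.copyOf(headers);
    }

    Request withHeader(String header) {
        List<String> copy = new ArrayList<>(headers);
        copy.add(header);
        return new Request(url, copy);
    }
}

final class Response {
    final int code;
    final String body;

    Response(int code, String body) {
        this.code = code;
        this.body = body;
    }
}

interface Interceptor {
    Response intercept(Chain chain);

    interface Chain {
        Request request();
        Response proceed(Request request);
    }
}

/** Hands each interceptor a chain positioned at the next link. */
final class InterceptorChain implements Interceptor.Chain {
    private final List<Interceptor> interceptors;
    private final int index;
    private final Request request;

    private InterceptorChain(List<Interceptor> interceptors, int index, Request request) {
        this.interceptors = interceptors;
        this.index = index;
        this.request = request;
    }

    static Response execute(List<Interceptor> interceptors, Request request) {
        return new InterceptorChain(interceptors, 0, request).proceed(request);
    }

    @Override public Request request() { return request; }

    @Override public Response proceed(Request next) {
        if (index >= interceptors.size()) throw new IllegalStateException("no interceptor produced a response");
        return interceptors.get(index).intercept(new InterceptorChain(interceptors, index + 1, next));
    }
}

/** Terminal link standing in for an alternate transport (e.g. Cronet): it answers instead of proceeding. */
final class AlternateTransportInterceptor implements Interceptor {
    @Override public Response intercept(Chain chain) {
        Request request = chain.request();
        return new Response(200, "alternate transport " + request.url + " " + request.headers);
    }
}
```

Callers above the chain keep seeing the same request and response types, which is what lets the transport underneath change without touching any feature's endpoint definitions.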

The database layer became one of the most significant performance bottlenecks in our legacy codebase, so we wanted to be sure to get it right this time around. After evaluating a number of options, we narrowed our choice down to Room and SQLDelight. While it was nearly a toss-up between the two, we were drawn to SQLDelight’s model of pure SQL source files. We felt it was a cleaner abstraction, and one that might better lend itself to multi-platform development down the road, potentially letting us reuse a critical layer of our new codebase on other platforms. SQLDelight’s programming model also helps to avoid queries with oversized projections (“SELECT *”), which had caused performance issues in the past.

With SQLDelight, our SQL queries become observable streams of immutable data models, a perfect fit for our architecture goals. Combined with other data sources using reactive operators, we have ViewModels ready for display. When a network response or other change updates the datastore, our ViewModels and Views refresh automatically.
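The observable-query idea can be modeled in a few lines of plain Java. SQLDelight ships its own reactive extensions for this; the sketch below does not use them, and `FriendsTable` and `ObservableQuery` are hypothetical names. Each write triggers a re-query, and subscribers only ever see fresh immutable snapshots, which they map to something display-ready.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical in-memory model of an observable query; not SQLDelight's API.

/** Stand-in for a table that notifies observers after every write. */
final class FriendsTable {
    private final List<String> rows = new ArrayList<>();
    private final List<Runnable> listeners = new ArrayList<>();

    void insert(String name) {
        rows.add(name);
        for (Runnable listener : listeners) listener.run(); // "table changed" notification
    }

    List<String> selectAll() { return List.copyOf(rows); } // immutable snapshot per query

    void addListener(Runnable listener) { listeners.add(listener); }
}

/** Runs a query now and again after every change, pushing each immutable result downstream. */
final class ObservableQuery<T> {
    private final FriendsTable table;
    private final Function<FriendsTable, T> query;

    ObservableQuery(FriendsTable table, Function<FriendsTable, T> query) {
        this.table = table;
        this.query = query;
    }

    void observe(Consumer<T> subscriber) {
        subscriber.accept(query.apply(table));
        table.addListener(() -> subscriber.accept(query.apply(table)));
    }
}
```

For example, observing `FriendsTable::selectAll` and mapping each result to a display string yields "0 friends []", then "1 friends [alice]" after the first insert, with no explicit refresh call from the UI.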

Even with SQLDelight in place, there’s still a lot to consider in managing a datastore. How will the app handle concurrent writes? How can we attribute disk usage and slow queries? How should we handle errors like low disk or failed upgrades? How can we detect slowdowns caused by database queuing? To address these, we designed a “DbManager” abstraction to interface with a SQLDelight database, which now powers several databases across our app.
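Two of those questions, concurrent writes and detecting slowdowns caused by queuing, can be sketched together. This hypothetical `DbWriteManager` is not Snapchat's DbManager: it assumes that funneling all writes through a single thread is an acceptable policy, and it records, per feature, how long each write waited in line and how long it ran.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch of two DbManager-style concerns; not Snapchat's DbManager.

final class DbWriteManager implements AutoCloseable {
    static final class WriteStat {
        final String feature;
        final long queuedNanos;
        final long runNanos;

        WriteStat(String feature, long queuedNanos, long runNanos) {
            this.feature = feature;
            this.queuedNanos = queuedNanos;
            this.runNanos = runNanos;
        }
    }

    // One writer thread: writes issued through this manager never run concurrently.
    private final ExecutorService writer = Executors.newSingleThreadExecutor(runnable -> {
        Thread thread = new Thread(runnable, "db-writer");
        thread.setDaemon(true);
        return thread;
    });

    final List<WriteStat> stats = Collections.synchronizedList(new ArrayList<>());

    /** Queues a write attributed to a feature; queue time exposes slowdowns caused by other writers. */
    Future<?> write(String feature, Runnable transaction) {
        long enqueued = System.nanoTime();
        return writer.submit(() -> {
            long started = System.nanoTime();
            transaction.run();
            stats.add(new WriteStat(feature, started - enqueued, System.nanoTime() - started));
        });
    }

    @Override
    public void close() { writer.shutdown(); }
}
```

A large `queuedNanos` paired with a small `runNanos` points at whoever was ahead in the queue rather than at the query itself, which is exactly the kind of attribution that is hard to get from raw timing alone.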

Loading a feature purely on-demand means it needs to load fast. And with Snapchat’s swipe-heavy navigation, it also means main-thread work must be kept to a minimum.

Most of our screens apart from the camera are centered around a scrolling list of content backed by a RecyclerView. In our legacy codebase, our RecyclerViews were managed differently by each feature, leading to performance-killing anti-patterns and business logic interspersed with our UI code.

We built a library called Recycling Center to address these shortcomings. Recycling Center provides a RecyclerView Adapter powered by RxJava Observables, which drives the pipeline from data models, to ViewModels, to Views. View binding logic, which maps a ViewModel to its View, is the only code allowed to run on the main thread.

A Recycling Center adapter is section-oriented, so screens can be composed of a number of different components, each with their own data pipeline. This also allows us to reuse featurettes across different screens without duplicating any code.
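The section-oriented idea reduces to mapping one flat list of adapter positions onto several independent sections. The sketch below is hypothetical and not Recycling Center's API; `getItemCount()` echoes the RecyclerView.Adapter method name, but this is plain Java, and a string stands in for a bound View.

```java
import java.util.List;

// Hypothetical sketch of a section-oriented adapter; not Recycling Center's API.

/** A display-ready item, computed off the main thread. */
final class ItemViewModel {
    final String viewType;
    final String text;

    ItemViewModel(String viewType, String text) {
        this.viewType = viewType;
        this.text = text;
    }
}

/** One independently fed part of a screen; in practice backed by its own data pipeline. */
interface Section {
    List<ItemViewModel> items();
}

/** Flattens sections into the single position space an adapter exposes. */
final class SectionedAdapter {
    private final List<Section> sections;

    SectionedAdapter(List<Section> sections) { this.sections = List.copyOf(sections); }

    int getItemCount() {
        int count = 0;
        for (Section section : sections) count += section.items().size();
        return count;
    }

    ItemViewModel getItem(int position) {
        if (position < 0) throw new IndexOutOfBoundsException("position " + position);
        int offset = position;
        for (Section section : sections) {
            List<ItemViewModel> items = section.items();
            if (offset < items.size()) return items.get(offset);
            offset -= items.size();
        }
        throw new IndexOutOfBoundsException("position " + position);
    }

    /** The only step meant for the main thread: copy a ready ViewModel into a view (a string here). */
    String bind(int position) {
        ItemViewModel item = getItem(position);
        return item.viewType + ":" + item.text;
    }
}
```

Because a `Section` knows nothing about the screen it sits on, the same friends section can appear under a header on one screen and on its own on another without duplicating code.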

Standardizing around Recycling Center allows us to monitor UI performance across features, by embedding our attribution framework. Teams have access to a dashboard of latency and jank metrics almost for free, which we use to find common performance pitfalls and opportunities.

Kotlin had been officially supported by the Android team a few months before our rewrite effort began. We included it as a core part of our stack for its null safety, expressiveness, and reduced boilerplate in dealing with common tasks. Because of the interoperability between Java and Kotlin, and because we planned to reuse as much of our existing code as was reasonable, we did not mandate Kotlin at any point. But it quickly became the preferred language for most developers on our team, with almost all new Android code being written in Kotlin.

The biggest shortcoming of Kotlin for us was its impact on our build speed. In particular, Kotlin’s annotation processing step, which we rely on mostly for Dagger2, added minutes to our builds. To mitigate this, we isolated most of our Dagger bindings in their own modules and required them to be written in Java.

While our overall APK size decreased significantly after we launched our rewrite, we found that the nullability protections that drew us to the language were also adding a lot of overhead to our production APK. After adding a few ProGuard rules to remove the Kotlin intrinsics, we shed over 750 KB from our APK and improved cold-start time by as much as 5%.

If you find yourself at the foot of a major app rewrite, it’s worth spending meaningful time designing and building your core architecture before onboarding features. Technologies and best-practices have changed since your team last started fresh, and the patterns you choose will be repeated as your codebase grows. Once you have confidence in your foundation, onboarding features will be easier and result in a higher quality and more scalable codebase.

For more details about our rewrite, see our Droidcon ’18 talk, Building for the Future at Snapchat.