Building a Spark-Powered Platform for ML Data Needs at Snap

The Machine Learning (ML) community is pushing the boundaries of innovation, but this rapid advancement brings unique and significant challenges for data platforms. Standard solutions often fall short, leaving ML practitioners grappling with infrastructure complexities instead of focusing on their core models and insights. This post explores these challenges, why Apache Spark remains a cornerstone for scalable data processing, and how we're building a curated platform, "Prism," to empower our ML teams.

Beyond Standard Spark: Why ML Demands a Different Kind of Data Platform

The Unique Demands of ML Data Processing

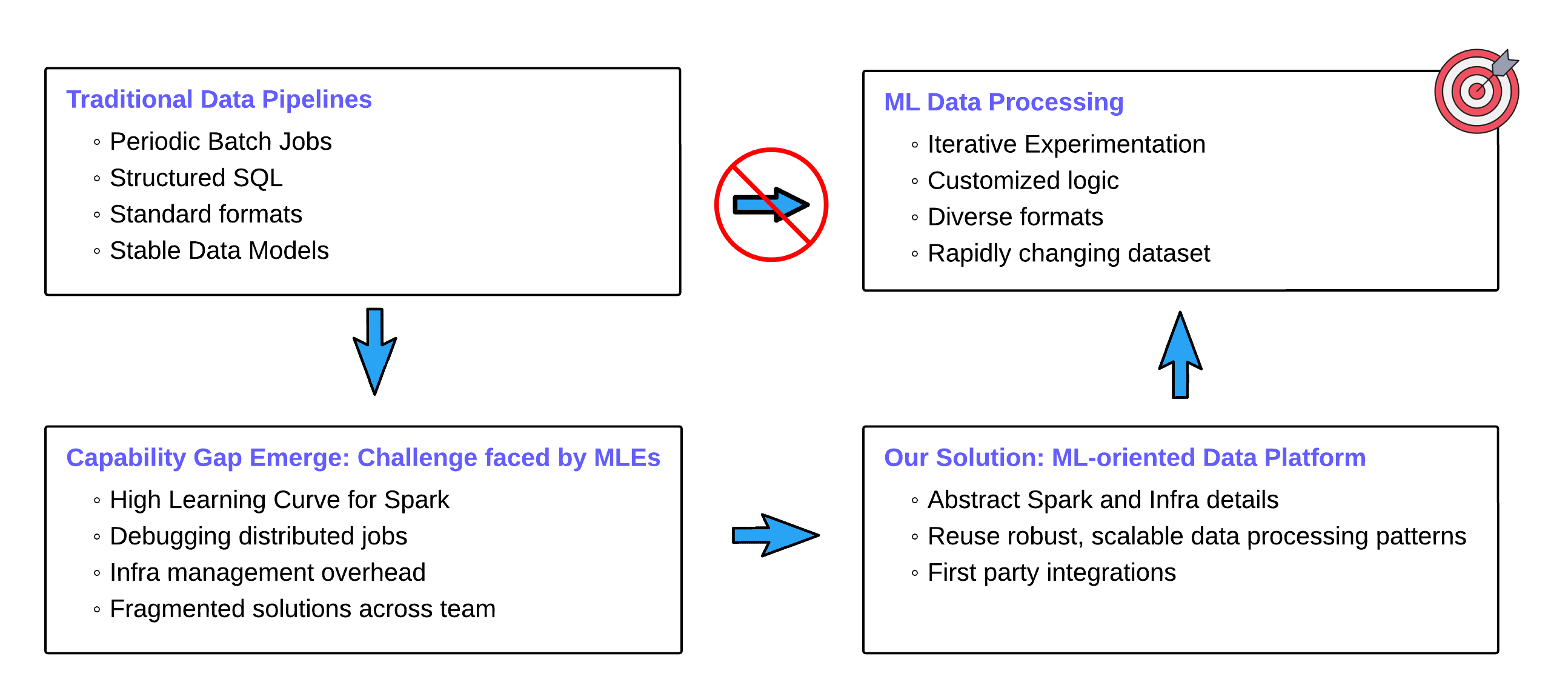

Apache Spark has long been a backbone of Snap’s analytical data infrastructure, powering robust, scalable pipelines for reporting, dashboarding, and batch data processing. However, as our machine learning initiatives have expanded, we’ve found that Spark alone isn’t enough to meet the evolving needs of ML development. ML data pipelines introduce a fundamentally different set of challenges:

Iterative Experimentation at Scale: Unlike traditional analytics, ML development is inherently iterative. Modelers constantly experiment with new ideas, trying different data slices, feature definitions, or preprocessing logic. Each experiment may require regenerating training datasets from massive raw inputs over and over again. This loop of experimentation pushes data infrastructure to sustain high-throughput workloads repeatedly, not just on a fixed schedule.

Flexibility during Development, Stability in Production: Unlike more stable analytics pipelines, ML workflows often linger in an extended experimentation phase. Early ML development thrives on freedom, including ad hoc data access, fast turnaround, and flexible schemas. But once a model moves to production, the same pipeline must become efficient, scalable, and observable. Supporting both phases smoothly requires tooling that evolves with the workflow.

Diverse Data Formats: ML teams use what’s best for the job: TFRecord for TensorFlow, Protobuf for gRPC-based serving, JSON for lightweight experimentation, etc. Forcing everything through a single format (like Parquet) would create unnecessary friction and bottlenecks. Tooling needs to meet users where they are.

Spark is powerful and battle-tested for large-scale data processing, especially well-suited for SQL-based transformations and stable batch workflows. While it serves many use cases well, it can be less intuitive for ML practitioners. As ML data processing becomes more complex and bespoke, Spark’s steep learning curve and lack of beginner-friendly abstractions can slow teams down. This is especially true during the early stages of experimentation when flexibility, speed, and ease of use matter most.

Consider the life of an ML engineer without supportive abstractions:

They must understand distributed systems' internals to write efficient Spark jobs.

They often reimplement boilerplate data prep logic already solved elsewhere.

They manage infrastructure (clusters, dependencies, upgrades) on their own.

Debugging distributed job failures becomes a time sink with little payoff.

All of this is in addition to the tools they already need to master: TensorFlow or PyTorch, Kubeflow, notebooks, experiment tracking, and more. Spark is essential for scale, but it’s not the heart of their job. ML engineers want to focus on experiments, not infrastructure.

At Snap, we have built an ML-optimized data platform that bridges the above gap. Our goal is to abstract away infrastructure, standardize common patterns, and integrate tightly with Snap’s internal ecosystem, so ML engineers can:

Define what data they need, not how to compute it

Iterate faster with less glue code and boilerplate

Reuse robust, secure, and scalable data processing patterns

Focus on model innovation and business logic

With this foundation, ML development becomes faster, more consistent, and more enjoyable, freeing engineers to do what they do best: build great models that drive impact.

Recap

Introducing Prism: Connecting User Needs to Platform Capabilities

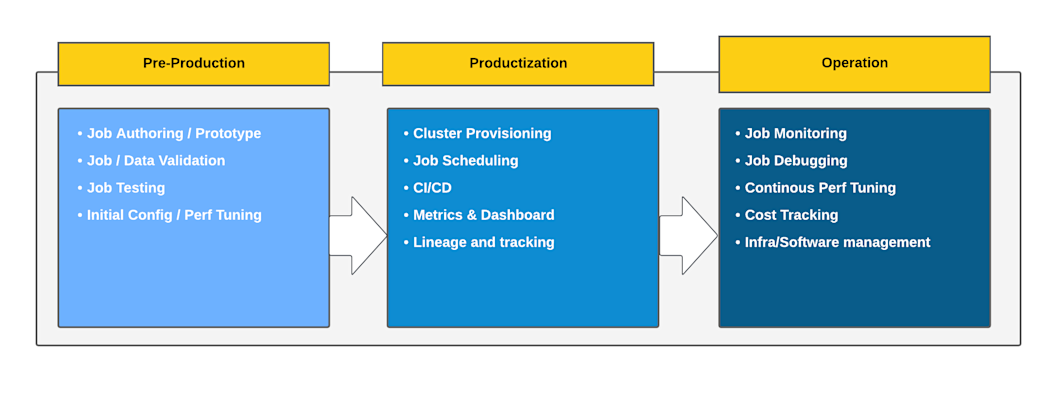

Prism is a unified Spark platform that streamlines the entire job lifecycle: from authoring to production and post-production. It abstracts Spark complexities with config-driven templates, an intuitive UI, and a powerful control plane, enabling fast, reliable development for data and ML engineers. With built-in metrics, cost tracking, and autotuning, Prism delivers a serverless-like experience while supporting scalable, efficient operations. This post outlines how Prism supports users at every stage and highlights the key components behind its architecture.

Pre-production: Users can skip low-level Spark code by using Prism’s config-based templates and UI to quickly build and test jobs. Predefined profiles cover most use cases, making it easy to get started and fine-tune performance.

Productization: Prism handles cluster setup, scaling, and teardown via a unified API, giving users a serverless-like experience. Built-in dashboards, metrics, and metadata tracking ensure visibility. It also supports seamless scheduling with tools like Airflow and Kubeflow.

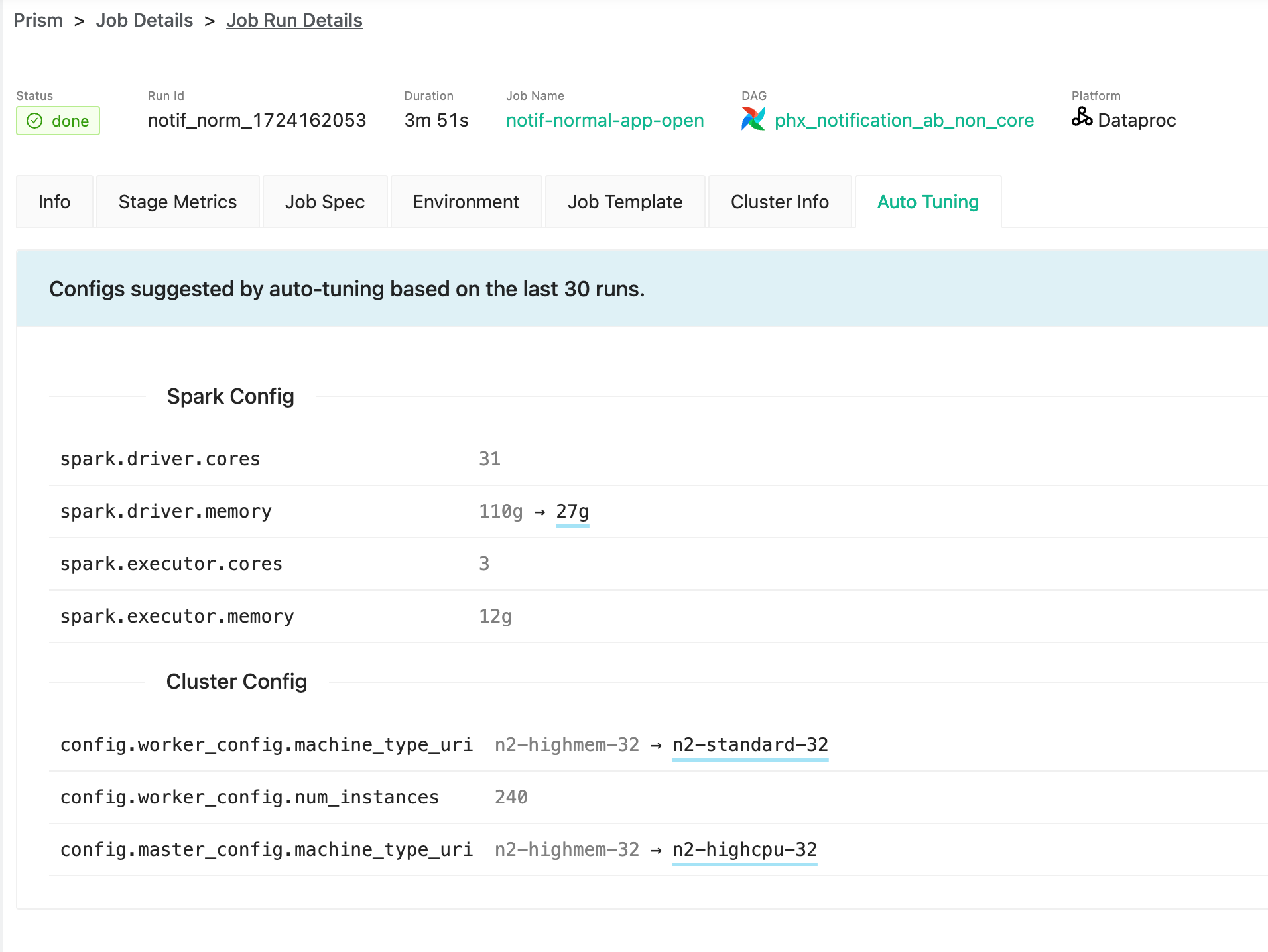

Post-production: Users get full monitoring, alerts, and debugging tools in one place. Prism stores job metrics to offer tuning advice and supports autotuning for performance and cost. It also tracks job costs and manages infra updates automatically.
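As a rough illustration of how stored job metrics could turn into tuning advice, here is a minimal Python sketch. It assumes a hypothetical history of per-run peak executor memory readings and recommends an executor memory size by taking roughly the 90th percentile and adding a safety headroom. The function name, the 20% headroom, and the rounding rule are illustrative assumptions, not Prism's actual autotuning logic.

```python
import math
from statistics import quantiles


def recommend_executor_memory_gb(peak_usage_gb, headroom=0.2, min_gb=2):
    """Suggest an executor memory size (GB) from past runs' peak usage.

    Hypothetical heuristic: take roughly the 90th percentile of observed
    per-run peaks, add a safety headroom, and round up to a whole GB.
    """
    if not peak_usage_gb:
        raise ValueError("need at least one historical run")
    if len(peak_usage_gb) == 1:
        p90 = peak_usage_gb[0]
    else:
        # With n=10, quantiles() returns the 9 decile cut points; the last is ~p90.
        p90 = quantiles(peak_usage_gb, n=10, method="inclusive")[-1]
    return max(min_gb, math.ceil(p90 * (1 + headroom)))


# Five historical runs peaking between 6 and 8 GB.
print(recommend_executor_memory_gb([6.0, 7.0, 7.5, 8.0, 6.5]))  # 10
```

Using a high percentile rather than the maximum keeps a single outlier run from inflating every future job, while the headroom absorbs normal run-to-run variation.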

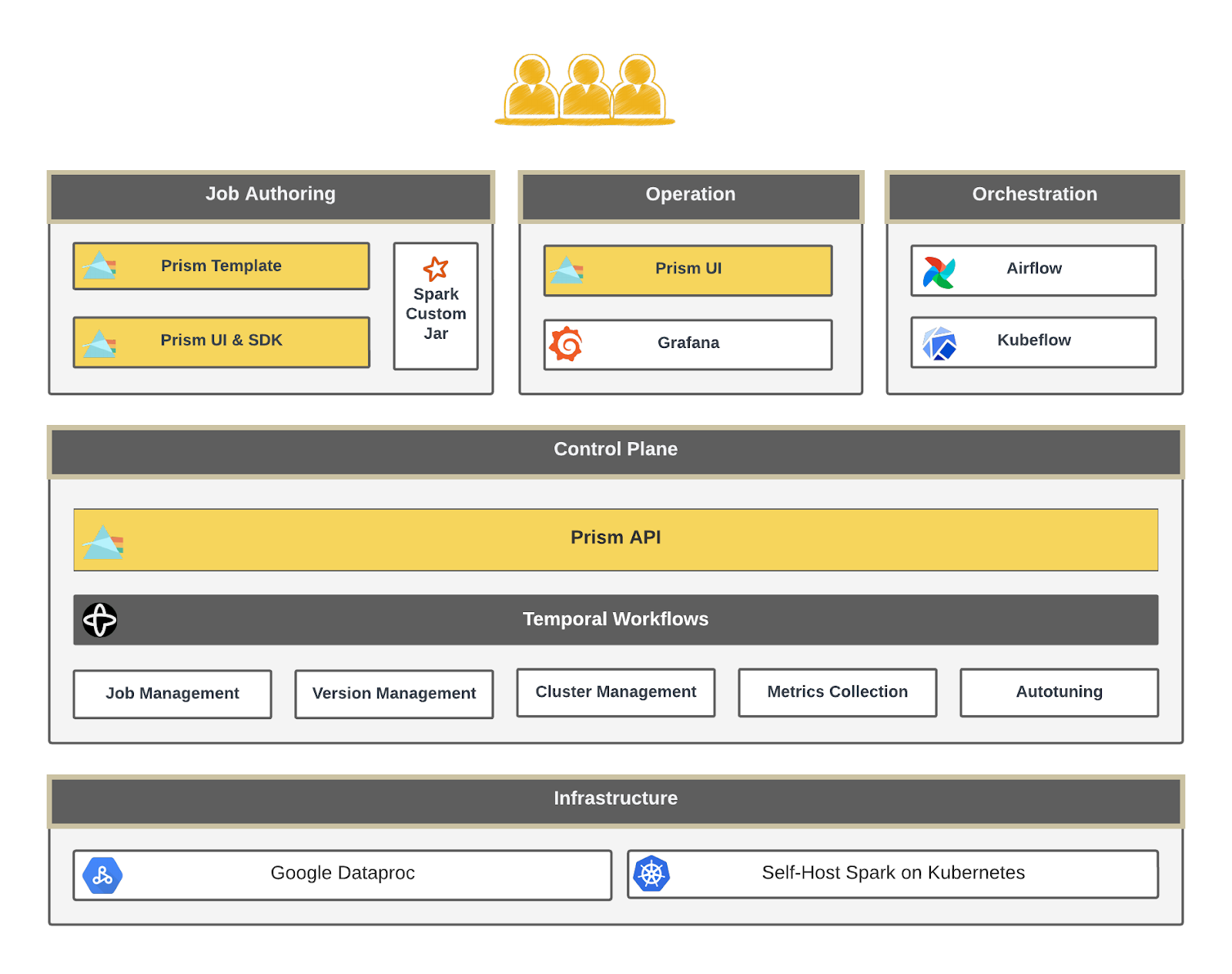

Architecture Overview

To streamline the Spark user experience, we centered Prism around two primary interfaces: the Prism UI and a client SDK, through which users can author, monitor, debug, and tune their Spark applications. To simplify job authoring, we built Prism Template, a homegrown framework that provides structured, reusable building blocks for defining Spark jobs.

All user requests flow through a centralized API layer and are processed by the Prism Control Plane. The control plane delegates complex orchestration tasks to a scalable, highly customizable workflow system built on Temporal. This separation of concerns ensures reliability and flexibility at scale.

Finally, the system integrates with internal services for metrics, cost tracking, and orchestration, so that users can focus on building business logic without having to manage infrastructure overhead.

Prism UI Console - One stop shop for Spark users

The launch of the Prism UI Console came in response to ongoing friction users faced when working with Spark. We originally provided an infra-team-owned library and a CLI to help standardize job submission and simplify integration. While these tools improved consistency, we continued to hear feedback from ML and data engineers about the difficulty of bootstrapping, debugging, and observing Spark jobs. Users had to juggle a fragmented toolset, including Spark UI, History Server, HDFS, and cloud-specific interfaces like Dataproc Console and Stackdriver, alongside internal systems for metrics, billing, and cost tracking.

To address these pain points, we invested in building the Prism UI: a single interface that consolidates these touchpoints into one cohesive experience. By reducing tool fragmentation, we’ve lowered the barrier to entry, improved development velocity, and created clearer lines of collaboration between platform teams and users. It also sets the stage for targeted improvements in usability and observability going forward.

Key Capabilities of Prism UI Console

Unified Job Search: A central search page allows users to find jobs and job runs by job name, namespace, cluster ID, and other attributes.

Metadata Storage: Job and cluster metadata, including configurations, metrics, and lineage, are persisted in a scalable backend, enabling rich analytics and auditability.

Logical Job Grouping for Trend Analysis: Jobs are grouped by orchestration task IDs (e.g., Airflow or Kubeflow), supporting historical analysis of runtime, cost, and resource utilization, which are key elements for cost visibility and performance tuning.

Integrated Real-Time Cost Estimation: Users receive live cost estimates through integration with our internal billing systems. This feedback loop is especially valuable during large-scale model experimentation.

One-Click Utilities and Deep Links: Power features like job cloning and deep links to relevant tools (e.g., Spark UI, logs, output tables) accelerate iteration and troubleshooting.

Integrated Job Authoring Experience: Users can author, configure, and launch Spark jobs directly within the UI:

Source/Sink Integration: Native connectors for Iceberg, TFRecords, Parquet, and BigQuery with support for decompression, sampling, and advanced config options.

SQL Editor with Autocomplete: Built-in metastore integration enables schema-aware autocomplete and syntax highlighting.

Preconfigured Job Profiles: Curated configurations built by Spark experts eliminate the need for manual tuning during early experimentation.

Smart Output Navigation: Jobs targeting Iceberg tables link directly to our Lakehouse UI for exploration and querying via Trino.

Template-Based Authoring: Support for low-code job creation through Prism Templates (covered in the section below), enabling rapid development without deep Spark knowledge.
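To make the trend-analysis idea concrete, here is a minimal Python sketch that groups job runs by their orchestration task ID and summarizes runtime and cost per task. The `JobRun` record, its fields, and the example task IDs are hypothetical; Prism's actual metadata schema isn't described in this post.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class JobRun:
    # Hypothetical run record; field names are illustrative, not Prism's schema.
    task_id: str        # orchestration task ID, e.g. "<dag_id>.<task_id>" in Airflow
    runtime_min: float
    cost_usd: float


def summarize_by_task(runs):
    """Group runs by orchestration task ID and compute per-task trend stats."""
    groups = defaultdict(list)
    for run in runs:
        groups[run.task_id].append(run)
    return {
        task_id: {
            "runs": len(task_runs),
            "median_runtime_min": median(r.runtime_min for r in task_runs),
            "total_cost_usd": round(sum(r.cost_usd for r in task_runs), 2),
        }
        for task_id, task_runs in groups.items()
    }


runs = [
    JobRun("daily_features.build", 42.0, 3.1),
    JobRun("daily_features.build", 47.0, 3.4),
    JobRun("daily_features.build", 45.0, 3.3),
    JobRun("train_data.export", 12.0, 0.8),
]
summary = summarize_by_task(runs)
```

Grouping by the orchestration task rather than the individual run is what turns isolated job records into a time series per logical pipeline step.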

Since launch, Prism UI has earned strong praise from developers, especially within ML organizations who value the simplicity and speed it brings to working with Spark. For teams scaling Spark usage in complex environments, investing in a unified interface not only removes friction but also builds a strong foundation for sustainable platform evolution.

Prism Template - Config-Driven Spark Job Authoring

Authoring performant and cost-efficient Spark jobs has consistently been a challenge. In the native Spark ecosystem, the same workload can be implemented in many different ways that vary widely in structure, resource usage, and maintainability. This flexibility, while powerful, often leads to inconsistent patterns across teams, making jobs harder to debug, optimize, and support at scale, even for platform teams.

To address this, we introduced a structured, configuration-driven approach to Spark job development based on reusable modules and standardized YAML definitions. This reduced implementation variability and made it easier for ML engineers (MLEs) to iterate quickly during experimentation while providing a clear path to production. With Spark configurations centralized and versioned, teams can tune jobs for scalability and efficiency as part of the productization step without overhauling their application logic.

Simplified Job Authoring: The platform enables users to define jobs using a YAML file, abstracting away Spark internals. It supports a seamless experience from local development and testing to staging and production deployment.

High-Quality, Reusable Components: Prism offers a collection of reliable, out-of-the-box modules, ranging from low-level tasks like Iceberg ingestion to high-level operations like feature extraction. These reusable components reduce user-side errors and operational overhead by ensuring consistency and correctness across Spark jobs.

Integrated Observability and Tooling: The platform provides built-in metrics, logging, and operational tools by owning the job bootstrap process and core application modules.

Managed Versioning: All job artifacts, including definition YAML, driver JARs, and plugin JARs, are centrally versioned and managed, ensuring safe upgrades and consistent behavior across environments.

Customizable Templates: Users can leverage pre-built templates with user-defined specifications to accelerate development while maintaining flexibility.
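One way to picture pre-built templates combined with user-defined specifications is a layered configuration merge: a curated profile supplies defaults, and the user's spec overrides only the fields it names. The Python sketch below is a hypothetical illustration; the profile name, its structure, and its values are assumptions (the keys under `spark_conf` are standard Spark settings, but the values are examples, not Prism's curated defaults).

```python
import copy

# Hypothetical curated profile; keys are real Spark settings, values are examples.
MEDIUM_BATCH_PROFILE = {
    "spark_conf": {
        "spark.executor.memory": "8g",
        "spark.executor.cores": "4",
        "spark.sql.shuffle.partitions": "400",
    },
    "cluster": {"workers": 10},
}


def deep_merge(base, override):
    """Return a new dict in which `override` wins, recursing into nested dicts."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# The user only states what differs from the curated profile.
user_spec = {
    "spark_conf": {"spark.sql.shuffle.partitions": "1200"},
    "cluster": {"workers": 40},
}
job_config = deep_merge(MEDIUM_BATCH_PROFILE, user_spec)
```

Because the merge returns a copy and never mutates the profile, the same versioned defaults can be shared safely across many jobs.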

Here we provide an example Prism Template YAML snippet that chains two modules in a single Spark job: one that creates a sequence column from flattened records, and one that ingests the output DataFrame into an Iceberg table.
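The chaining idea itself can be sketched in plain Python under assumed names. This is not Prism's template format: the job definition is a dict standing in for the YAML, the module names `build_sequence_column` and `iceberg_sink` and their parameters are hypothetical, "sequence column" is interpreted as collecting each key's values into an ordered list, and the sink writes to an in-memory dict instead of a real Iceberg table.

```python
from collections import defaultdict

# In-memory stand-in for an Iceberg catalog: table name -> list of rows.
FAKE_CATALOG = {}


def build_sequence_column(rows, params):
    """Collapse flattened records into one row per key with an ordered list column."""
    key, order_by = params["group_by"], params["order_by"]
    value, out = params["value_column"], params["output_column"]
    grouped = defaultdict(list)
    for row in sorted(rows, key=lambda r: r[order_by]):
        grouped[row[key]].append(row[value])
    return [{key: k, out: seq} for k, seq in grouped.items()]


def iceberg_sink(rows, params):
    """Hypothetical sink: append rows to FAKE_CATALOG instead of a real Iceberg table."""
    FAKE_CATALOG.setdefault(params["table"], []).extend(rows)
    return rows


# Registry mapping module type names (as they might appear in a template) to functions.
MODULES = {
    "build_sequence_column": build_sequence_column,
    "iceberg_sink": iceberg_sink,
}


def run_job(job_def, rows):
    """Run each configured module in order, feeding one module's output to the next."""
    for step in job_def["modules"]:
        rows = MODULES[step["type"]](rows, step.get("params", {}))
    return rows


job_def = {
    "name": "user_event_sequences",
    "modules": [
        {"type": "build_sequence_column",
         "params": {"group_by": "user_id", "order_by": "ts",
                    "value_column": "event", "output_column": "event_sequence"}},
        {"type": "iceberg_sink", "params": {"table": "ml.user_event_sequences"}},
    ],
}
source = [
    {"user_id": "u1", "ts": 2, "event": "view"},
    {"user_id": "u1", "ts": 1, "event": "open"},
    {"user_id": "u2", "ts": 3, "event": "share"},
]
output = run_job(job_def, source)
```

The user-facing definition only names modules and parameters; the registry and runner, which a platform team would own, decide how each step executes.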

Prism Control Plane - Reliable Job Management at Scale

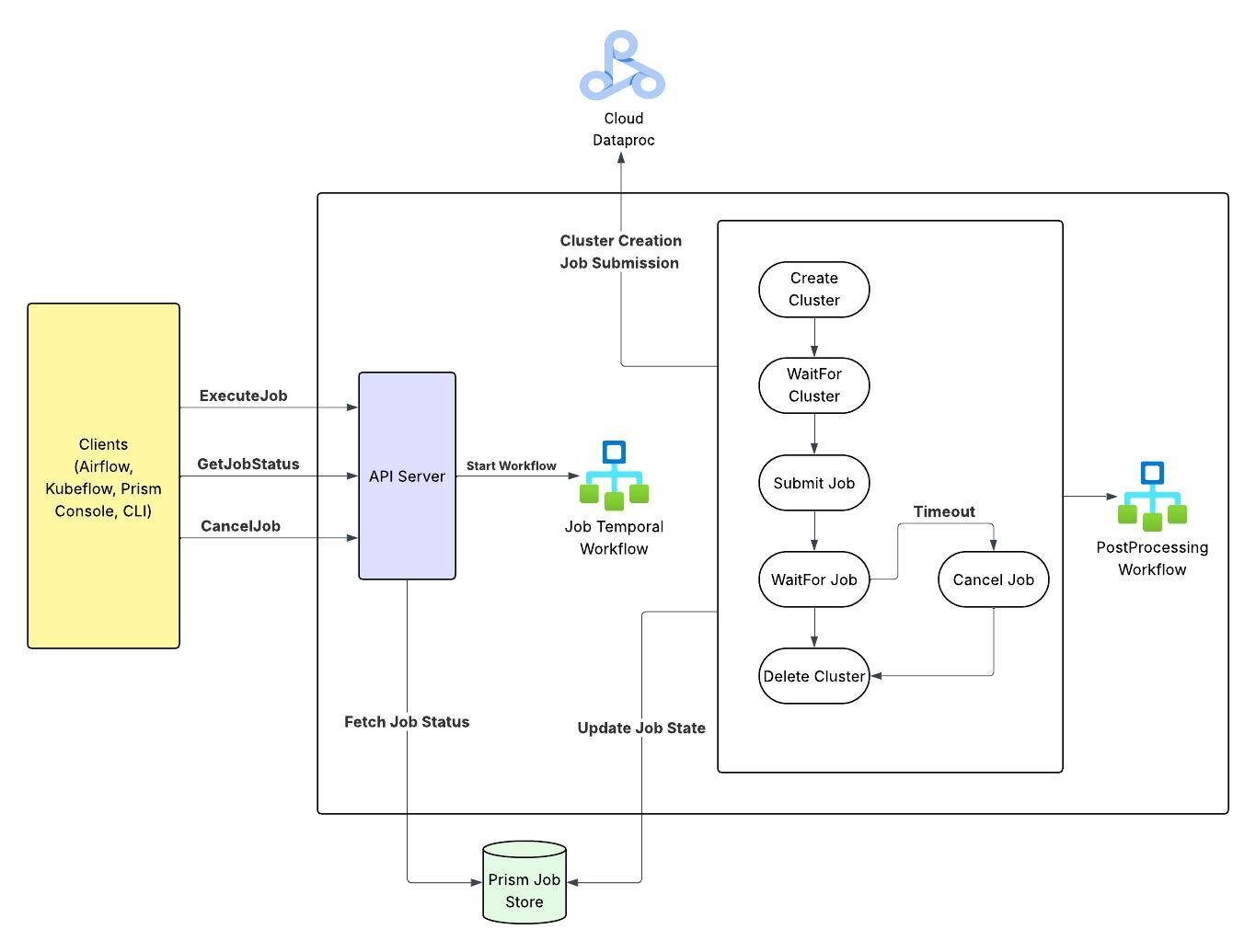

Our initial control plane was built as a lightweight service that acted primarily as a proxy to Dataproc APIs, with some basic abstractions like permission management. Cluster and job management were handled through separate APIs, and we relied on external clients, like our Airflow operator, to orchestrate the full lifecycle. This meant users had to explicitly manage clusters, including provisioning, teardown, and failure handling, which added operational complexity and reduced reliability.

As usage grew and feedback accumulated, it became clear that this model didn’t scale well. Managing infrastructure manually was both error-prone and a distraction from core ML and data engineering work. In response, we redesigned our control plane to offer a simplified, serverless-like experience. The new API abstracts away the underlying cluster lifecycle and presents a single job submission endpoint, handling orchestration internally.

To make this possible, we introduced a dedicated workflow management system built on Temporal. This system offloads execution tasks, such as cluster provisioning, job submission, status tracking, cancellation, and retries, from the core control plane. The architecture now separates responsibilities cleanly: the control plane manages job metadata and configuration, while the workflow engine handles runtime orchestration.
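The orchestration sequence described above (provision a cluster, submit the job, track status, retry transient failures, always tear down) can be sketched in plain Python. This does not use Temporal's SDK; in a Temporal-based system each step would typically be an activity with its own retry policy. `FakeClusterClient`, its methods, and the state names are hypothetical stand-ins for a real cluster API.

```python
import time


class TransientError(Exception):
    """Raised by the (hypothetical) cluster client for retryable failures."""


def with_retries(fn, attempts=3, backoff_s=0.0):
    """Call fn, retrying on TransientError with linear backoff; re-raise on the last try."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == attempts:
                raise
            time.sleep(backoff_s * attempt)


def run_job_workflow(client, job_spec, poll_interval_s=0.0, max_polls=100):
    """Provision, submit, poll to a terminal state, and always delete the cluster."""
    cluster_id = with_retries(lambda: client.create_cluster(job_spec["cluster"]))
    try:
        job_id = with_retries(lambda: client.submit_job(cluster_id, job_spec["job"]))
        for _ in range(max_polls):
            state = client.get_job_state(job_id)
            if state in ("DONE", "ERROR", "CANCELLED"):
                return state
            time.sleep(poll_interval_s)
        client.cancel_job(job_id)
        return "TIMED_OUT"
    finally:
        with_retries(lambda: client.delete_cluster(cluster_id))


class FakeClusterClient:
    """In-memory stand-in for a cluster API, used only to exercise the workflow."""

    def __init__(self, fail_create_times=0, states=("RUNNING", "DONE")):
        self.fail_create_times = fail_create_times
        self.states = list(states)
        self.deleted = []

    def create_cluster(self, cfg):
        if self.fail_create_times > 0:
            self.fail_create_times -= 1
            raise TransientError("quota temporarily exceeded")
        return "cluster-1"

    def submit_job(self, cluster_id, job):
        return "job-1"

    def get_job_state(self, job_id):
        # Advance through the scripted states, then keep reporting the last one.
        return self.states.pop(0) if len(self.states) > 1 else self.states[0]

    def cancel_job(self, job_id):
        self.states = ["CANCELLED"]

    def delete_cluster(self, cluster_id):
        self.deleted.append(cluster_id)
```

Putting teardown in a `finally` block is what lets callers submit a single job without managing cluster lifecycles themselves.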

This shift significantly improved system reliability and scalability, while also laying the foundation for higher-level capabilities like autotuning and intelligent retries.

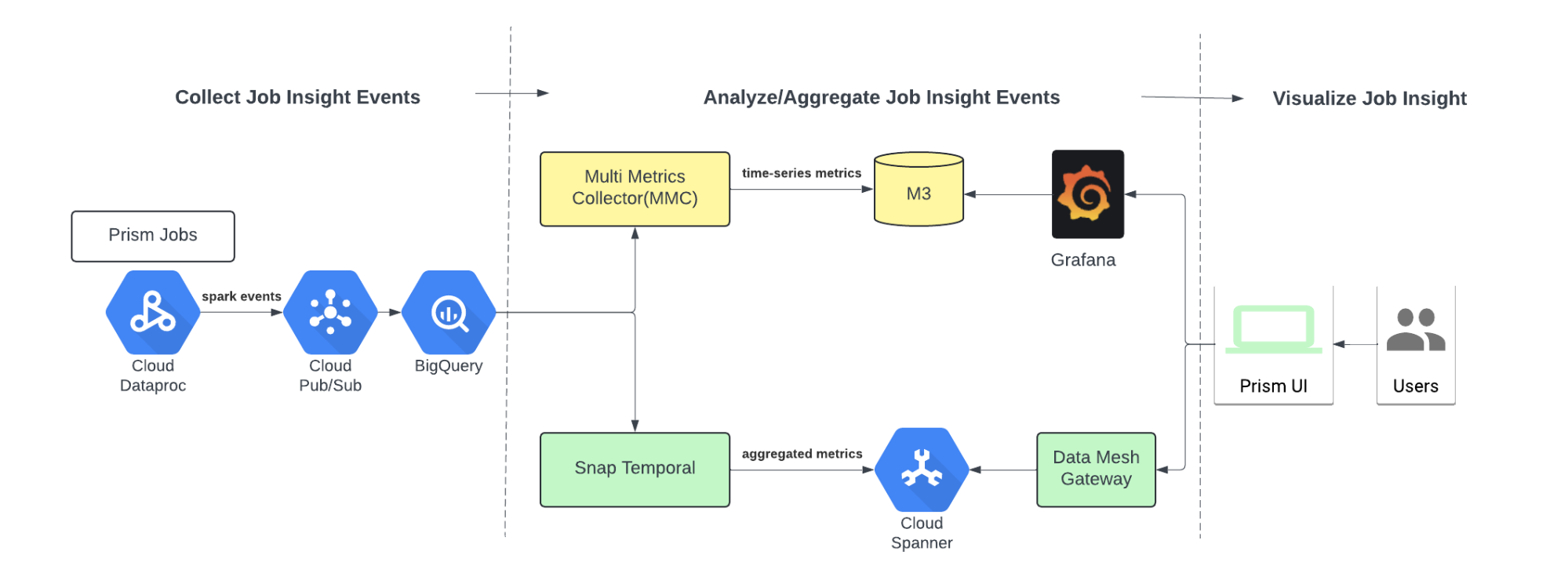

Prism Metrics System & Autotuning - Powering Visibility and Smarter Defaults

Out of the box, Spark and Dataproc expose a large volume of operational metrics. However, these metrics are often fragmented, inconsistent, and difficult to consume, especially when trying to build a reliable user interface or power programmatic features like autoscaling and intelligent retries.

Several challenges emerged early on:

Metrics exist at multiple levels (job, stage, task, cluster), but lack a cohesive structure for correlation or aggregation.

Most metrics weren’t designed for time-series analysis, limiting their usefulness for monitoring and debugging.

Inconsistent TTLs, naming conventions, and formats across metric data sources made integration brittle.

The raw metrics were not well-suited for use in automation, such as autotuning or retry policies.

To address this, we built a dedicated end-to-end metrics system tailored for Spark workloads. It ingests and standardizes key signals from jobs, clusters, and dependent infrastructure, and stores them in a centralized Spanner database for durability and consistency. This allowed us to surface meaningful insights through the Prism UI Console and enabled advanced features like autoscaling and intelligent retry mechanisms.
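A minimal Python sketch of the standardization step is shown below: raw readings from different sources are mapped onto one canonical, time-stamped schema with consistent names and units. `executorRunTime` (milliseconds) and `peakExecutionMemory` (bytes) are Spark task-metric names; the `yarn_memory_used_mb` name, the `MetricPoint` schema, and the mapping table are hypothetical and not Prism's actual design.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MetricPoint:
    level: str       # "job", "stage", "task", or "cluster"
    entity_id: str   # e.g. "job-1/stage-3" (hypothetical ID format)
    name: str        # canonical metric name
    value: float     # value in the canonical unit
    ts: int          # epoch seconds, so every point fits one time-series model


# Hypothetical mapping: (source, raw name) -> (canonical name, divisor to canonical unit).
CANONICAL = {
    ("spark", "executorRunTime"): ("executor_run_time_s", 1000),              # ms -> s
    ("spark", "peakExecutionMemory"): ("peak_execution_memory_gib", 1024**3),  # bytes -> GiB
    ("cluster", "yarn_memory_used_mb"): ("cluster_memory_used_gib", 1024),     # MiB -> GiB
}


def normalize(source, raw_name, value, level, entity_id, ts) -> Optional[MetricPoint]:
    """Map one raw reading onto the shared schema; unknown metrics return None."""
    mapping = CANONICAL.get((source, raw_name))
    if mapping is None:
        return None
    name, divisor = mapping
    return MetricPoint(level, entity_id, name, value / divisor, ts)
```

Dropping unmapped metrics instead of storing them raw is one way to keep downstream consumers, such as the UI or autotuning, working against a single consistent schema.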

This investment became a foundational part of the platform, developed alongside the UI console to ensure tight integration and a unified experience for both manual observability and automated optimization.

Adoption Journey: How Teams Engage with Prism

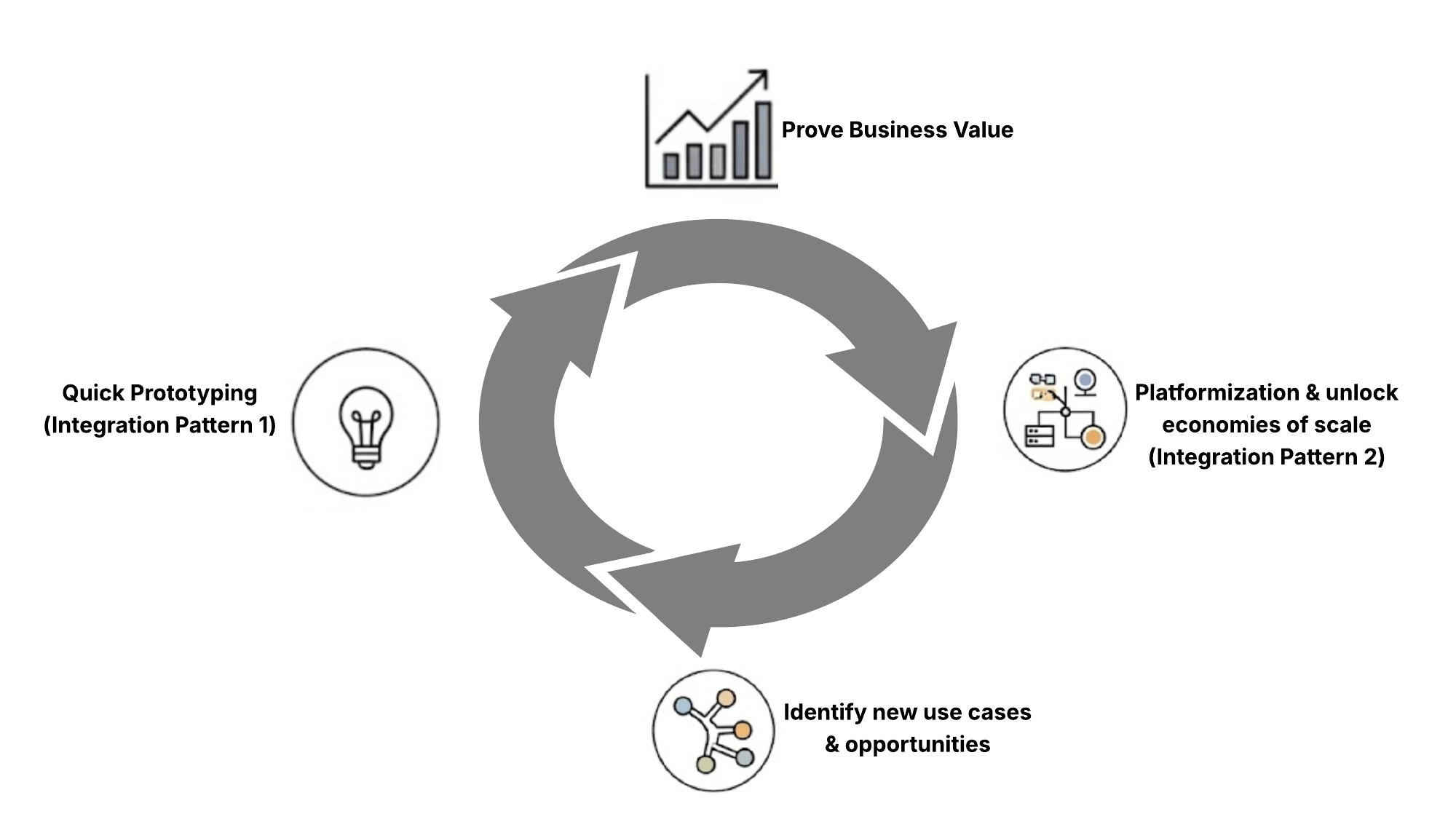

Platform impact is best measured by adoption, and Prism’s growth tells a clear story. Over the past two years, Prism’s daily job volume has grown from single digits to several thousand, with peak periods exceeding 10,000 jobs per day. We believe this growth is supported by Prism's flexible design, which aims to accommodate the varied Spark needs of teams across Snap. We’ve seen two main ways teams engage with Prism:

Direct Product Adoption by Advanced Teams: Teams with complex Spark workloads, which usually require custom logic, large-scale joins, and tight data-model integration, use Prism directly. It gives them full control to build and operate powerful data pipelines.

Integration into Internal Platforms: Other teams interact with Spark indirectly via internal tools for ML data prep, feature engineering, and experimentation. These tools embed Prism’s core capabilities and expose domain-specific Spark functionality to their own users, extending Prism's reach without requiring deep Spark expertise from everyone.

Supporting both direct and embedded patterns has been key to Prism’s success. It enables fast experimentation, reusable solutions, and a cycle of continuous innovation across the company.

What’s Next: Growing Impact and Strengthening Capabilities

Prism has already made a strong impact, but we’re just getting started. Looking ahead, we’re focused on four main areas:

Supercharging ML Development with LLMs: We’re integrating Large Language Models (LLMs) into Prism to help ML engineers work smarter and faster. From auto-generating job templates to suggesting performance tweaks and surfacing debugging insights, LLMs will boost development velocity and reduce time spent on repetitive tasks.

Bringing Batch and Streaming Under One Roof: We’re unifying the user experience across Spark batch and Flink streaming workloads. With a single, streamlined interface for authoring, monitoring, and managing pipelines, regardless of processing mode, teams will spend less time switching contexts and more time delivering value.

Pushing Spark Performance Further: Performance is key, so we’re experimenting with advanced techniques like GPU acceleration and smarter JVM tuning. We’re also taking steps to self-host core parts of our Spark setup, giving us more control and the ability to optimize precisely for our needs.

Building a Seamless ML Data Hub: We’re making Prism the central hub for all ML data processing. By deepening integration with key lifecycle tools, including training data generation, feature engineering, and data quality management, teams can prepare, refine, and validate data within a unified environment. And with seamless connections to downstream model training and serving platforms, Prism makes it easier to go from raw data to production-ready models with speed and confidence.

2025 is shaping up to be an exciting year for Prism. As we take on bigger challenges and scale the platform, we’re looking for thoughtful, curious builders to join us. If you’re excited by the idea of shaping ML infrastructure, working with big data, or solving hard performance problems, we’d love to connect.